Magnetic resonance (MR) images are often used for image interpretation, registration, segmentation, lesion tracking, and more. These tasks require knowing which anatomical region a scan covers (head, breast, abdomen, knee, etc.) so that the appropriate algorithm, segmentation atlas, prior scans and previously segmented lesions can be selected. However, this basic information, known as the exam group, is stored in the DICOM metadata, where it is sometimes missing, mistyped or specific to the institution. A more robust approach is to identify the exam group from the image data itself and apply a standard naming convention.

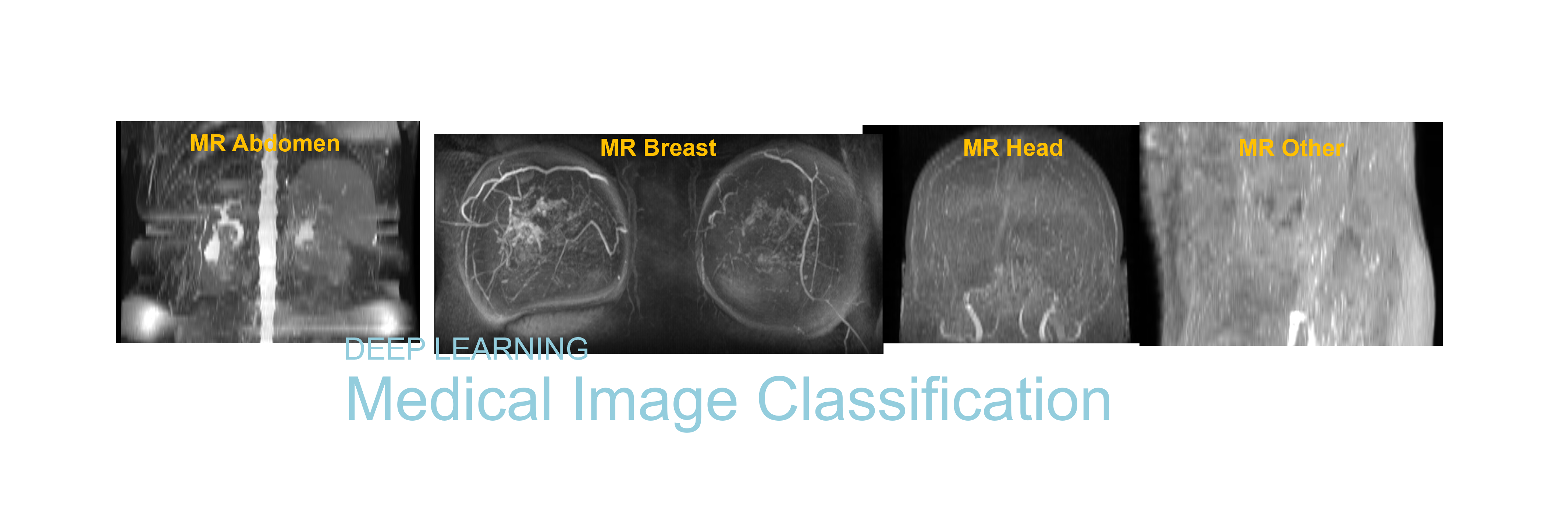

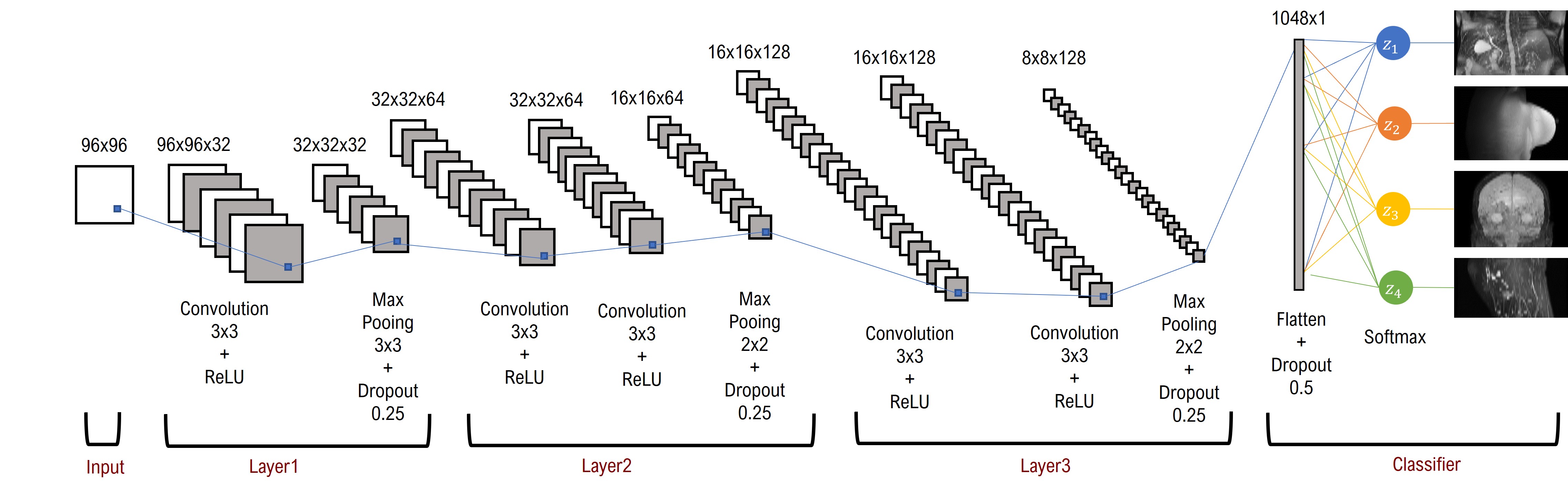

Because exam group identification is essentially an image classification problem, supervised learning with a convolutional neural network (CNN) is used. MR scans are grouped into four exam groups: MR_Head, MR_Breast, MR_Abdomen and MR_Other. MR_Other covers any MR scan that does not belong to the other three groups, such as neck, shoulder, spine or knee. For each of the four exam groups, maximum intensity projection (MIP) images along the superior-inferior axis are created to reduce the 3D MR image volumes to 2D. These projected 2D images serve as the input data for deep learning training.
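The projection step can be illustrated with a minimal NumPy sketch. It is not the implementation used here: the function names are hypothetical, the volume is synthetic, and it assumes the superior-inferior direction is array axis 0, which in practice depends on how the scan was loaded and oriented. The rescaling to [0, 1] is also an assumption, added only because classifiers are commonly fed normalized inputs.

```python
import numpy as np

# The four exam group labels named in the text.
EXAM_GROUPS = ("MR_Head", "MR_Breast", "MR_Abdomen", "MR_Other")


def superior_inferior_mip(volume: np.ndarray, si_axis: int = 0) -> np.ndarray:
    """Collapse a 3D volume to 2D by taking the maximum along one axis.

    si_axis is assumed to be the superior-inferior axis; check the scan
    orientation before relying on this default.
    """
    if volume.ndim != 3:
        raise ValueError(f"expected a 3D volume, got {volume.ndim} dimensions")
    return volume.max(axis=si_axis)


def normalize_to_unit_range(image: np.ndarray) -> np.ndarray:
    """Rescale intensities to [0, 1]; a constant image maps to all zeros.

    This step is an assumption, not something stated in the text.
    """
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)


# Synthetic stand-in for a scan: 4 slices of 3 x 5 voxels.
volume = np.zeros((4, 3, 5), dtype=np.int16)
volume[1, 0, 2] = 100   # bright voxel in slice 1
volume[3, 2, 4] = 250   # brighter voxel in slice 3

mip = superior_inferior_mip(volume, si_axis=0)
network_input = normalize_to_unit_range(mip)
```

Each pixel of `mip` holds the brightest voxel found along the projected axis, so both bright voxels survive in the 2D image even though they sit in different slices. The resulting 2D array is what a classifier would receive in place of the full volume.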

Convolutional neural network for exam group classification of MR images

Contact us for more information.